Masterclass: Sourcing and DD on Our Investment in Groq

The AI Infrastructure Revolution You Didn't See Coming

At Alumni Ventures (AV), we don’t just follow market trends; we strive to anticipate them, with help from our extensive community. Our 2021 investment in Groq demonstrates this approach: a calculated bet on revolutionary AI hardware that has positioned our investors at the forefront of computing’s next paradigm shift, inference computing.

Early Access: The Technical Edge Most VCs Missed

For the technically inclined: Groq’s Language Processing Unit (LPU) represented a fundamental rethinking of AI acceleration. When we first invested in 2021, its architecture demonstrated 20x lower latency than comparable GPUs on inference workloads, a technical achievement that went largely unnoticed by the mainstream. Back then, few people knew what an “inference workload” even was. Early customers included a quant hedge fund and an automotive company working on autonomous vehicles.

An AI inference workload refers to the computational tasks involved in running a trained machine learning model to generate predictions or decisions based on new input data. This is the stage after the model has been trained and deployed, where it is actively being used in real-world applications.
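To make the training/inference split concrete, here is a minimal sketch. The weights below are hypothetical values standing in for the output of an earlier training run (they are not from any real model); inference is simply applying those frozen weights to new inputs. Nothing is learned at this stage.

```python
import math

# Hypothetical weights, standing in for the result of a completed training run.
# At inference time they are frozen: nothing below updates them.
WEIGHTS = [0.8, -1.2, 0.5]
BIAS = -0.1

def predict(features: list[float]) -> float:
    """Run inference: apply the frozen model to one new input, return a probability."""
    z = sum(w * x for w, x in zip(WEIGHTS, features)) + BIAS
    return 1.0 / (1.0 + math.exp(-z))  # logistic (sigmoid) output in (0, 1)

def classify(features: list[float], threshold: float = 0.5) -> bool:
    """Turn the model's probability into a yes/no decision."""
    return predict(features) >= threshold

# New, previously unseen inputs arriving in production.
new_inputs = [[1.0, 0.2, 0.3], [0.1, 1.5, 0.0]]
for x in new_inputs:
    print(x, round(predict(x), 3), classify(x))
```

Real inference workloads run the same pattern at vastly larger scale: billions of parameters instead of three, and millions of requests instead of two.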

In hindsight, this is obvious; at the time, it was far less so.

NVIDIA’s CEO, Jensen Huang, has highlighted the immense scale of AI inference compared to training. In a discussion with Brad Gerstner of Altimeter, Gerstner noted that inference accounts for about 40% of NVIDIA’s revenue and asked what to expect going forward. Huang responded, “It’s about to go up a billion times.”

Key Aspects of AI Inference Workloads

- Processing New Data: The model takes real-time or batch input data and processes it to produce predictions, classifications, or generated content.

- Optimized for Performance: Unlike training (which is computationally intensive and typically performed offline), inference must be efficient, often requiring low latency and high throughput for real-time applications.

- Hardware Acceleration: Inference workloads can run on CPUs, GPUs, TPUs (Tensor Processing Units), or Groq’s LPUs, depending on the complexity and speed requirements.

- Deployment Environments: These workloads can run in cloud data centers (e.g., AWS, Google Cloud, Azure), on edge devices (e.g., smartphones, IoT devices, autonomous vehicles), or on on-premises servers.

- Optimization Techniques: Techniques such as quantization, pruning, and model distillation are used to reduce computational costs and latency.
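As an illustration of one of the optimization techniques above, here is a minimal sketch of symmetric int8 post-training quantization with a single shared scale. The weights are hypothetical, and production toolchains add per-channel scales, calibration data, and integer kernels; this only shows the core idea of trading a small, bounded rounding error for 4x smaller storage than float32.

```python
# Minimal sketch of symmetric int8 quantization (one shared scale per tensor).
# Weights are hypothetical; real toolchains use calibration and per-channel scales.

def quantize_int8(weights: list[float]) -> tuple[list[int], float]:
    """Map float weights to int8 values in [-127, 127] using one scale factor."""
    max_abs = max(abs(w) for w in weights)
    scale = max_abs / 127 if max_abs > 0 else 1.0
    q = [max(-127, min(127, round(w / scale))) for w in weights]
    return q, scale

def dequantize(q: list[int], scale: float) -> list[float]:
    """Recover approximate float weights from the int8 values."""
    return [v * scale for v in q]

weights = [0.42, -1.27, 0.003, 0.9, -0.55]
q, scale = quantize_int8(weights)
restored = dequantize(q, scale)

# Rounding error per weight is at most half a quantization step (scale / 2).
max_error = max(abs(a - b) for a, b in zip(weights, restored))
print(q, scale, max_error)
```

Each weight now fits in 1 byte instead of 4, which cuts memory traffic, usually the dominant cost in inference, at the price of a small approximation error.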

Examples of AI Inference Workloads

- Computer Vision: Identifying objects in images (e.g., facial recognition, medical imaging analysis)

- Natural Language Processing: Chatbots, language translation, or sentiment analysis

- Autonomous Systems: Real-time decision-making in self-driving cars

- Recommendation Systems: Personalized content suggestions (e.g., Netflix, Amazon)

- Generative AI: AI-generated text, images, or videos (e.g., ChatGPT, DALL·E)

The Groq Difference

Groq was founded by Jonathan Ross, who previously designed Google’s Tensor Processing Unit (TPU). Ross’s team eliminated the traditional bottlenecks in inference by using a deterministic single-core design rather than the non-deterministic, dynamically scheduled multi-core architectures that dominate the market. Read the whitepaper for a deep dive on the technology.
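Why does a deterministic design give consistent latency? A toy simulation can illustrate the general principle. It assumes a hypothetical four-stage pipeline: in the static version, every stage's timing is fixed ahead of time; in the dynamic version, each stage may randomly stall on runtime contention. The stage costs and stall parameters are invented for illustration and do not model real Groq or GPU hardware.

```python
import random
import statistics

# Toy model only: stage costs and stall behavior are hypothetical and do not
# describe any real Groq or GPU hardware.
STAGE_CYCLES = [40, 25, 60, 35]  # hypothetical per-stage compute cost

def static_schedule_latency() -> int:
    """Timing fixed ahead of time (e.g., by a compiler): same latency every run."""
    return sum(STAGE_CYCLES)

def dynamic_schedule_latency(rng: random.Random, stall_prob: float = 0.3,
                             max_stall: int = 50) -> int:
    """Resources arbitrated at runtime: any stage may stall on contention."""
    total = 0
    for cycles in STAGE_CYCLES:
        total += cycles
        if rng.random() < stall_prob:
            total += rng.randint(1, max_stall)  # random extra wait
    return total

rng = random.Random(42)  # fixed seed so the run is reproducible
static_runs = [static_schedule_latency() for _ in range(1000)]
dynamic_runs = [dynamic_schedule_latency(rng) for _ in range(1000)]

print("static  mean/stdev:", statistics.mean(static_runs), statistics.pstdev(static_runs))
print("dynamic mean/stdev:", statistics.mean(dynamic_runs), statistics.pstdev(dynamic_runs))
```

The static runs show zero spread, while the dynamic runs are both slower on average and variable, which is the tail-latency problem that matters for real-time applications.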

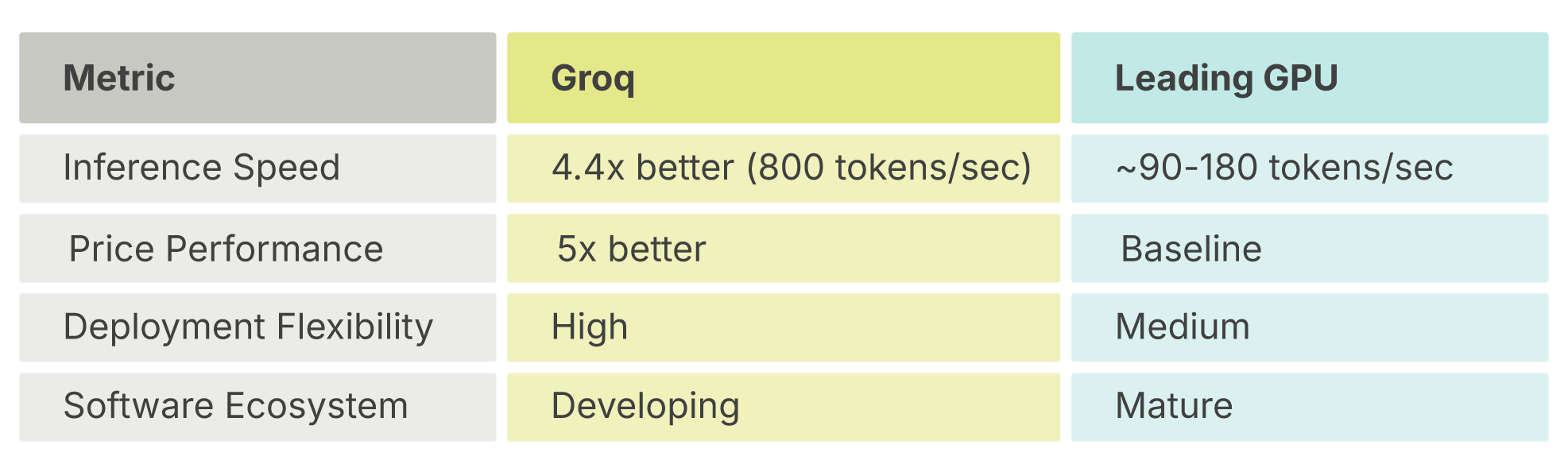

Translated into a performance comparison (mid-2024 metrics – details here), here’s the bottom line:

- Standard GPU: ~10-30 tokens/second with variable latency

- Groq LPU: 275 tokens/second with consistent sub-millisecond latency (about 9x faster than the top of the GPU range)

- Architecture: Fully deterministic execution vs. dynamic, non-deterministic scheduling
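The "9x" figure lines up with the top of the GPU range; against the bottom of the range, the gap is wider. A quick check of the arithmetic uses only the mid-2024 numbers quoted above, plus a hypothetical 500-token answer length to translate throughput into user-facing wait time.

```python
# Arithmetic behind the comparison, using only the mid-2024 figures quoted above.
gpu_low, gpu_high = 10, 30   # standard GPU, tokens/second
lpu = 275                    # Groq LPU, tokens/second

speedup_vs_best_gpu = lpu / gpu_high   # roughly 9.2x
speedup_vs_worst_gpu = lpu / gpu_low   # 27.5x

# Time to stream a 500-token answer (the length is a hypothetical example).
answer_tokens = 500
print(f"{speedup_vs_best_gpu:.1f}x to {speedup_vs_worst_gpu:.1f}x faster")
print(f"GPU: {answer_tokens / gpu_high:.1f}-{answer_tokens / gpu_low:.1f} s, "
      f"LPU: {answer_tokens / lpu:.2f} s")
```

For a chat user, that is the difference between waiting roughly 17 to 50 seconds for a full answer and waiting under 2 seconds.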

This isn’t an incremental improvement; it’s a fundamentally different approach, and one that’s now proving crucial for real-time AI applications.

Being Ahead of the Curve

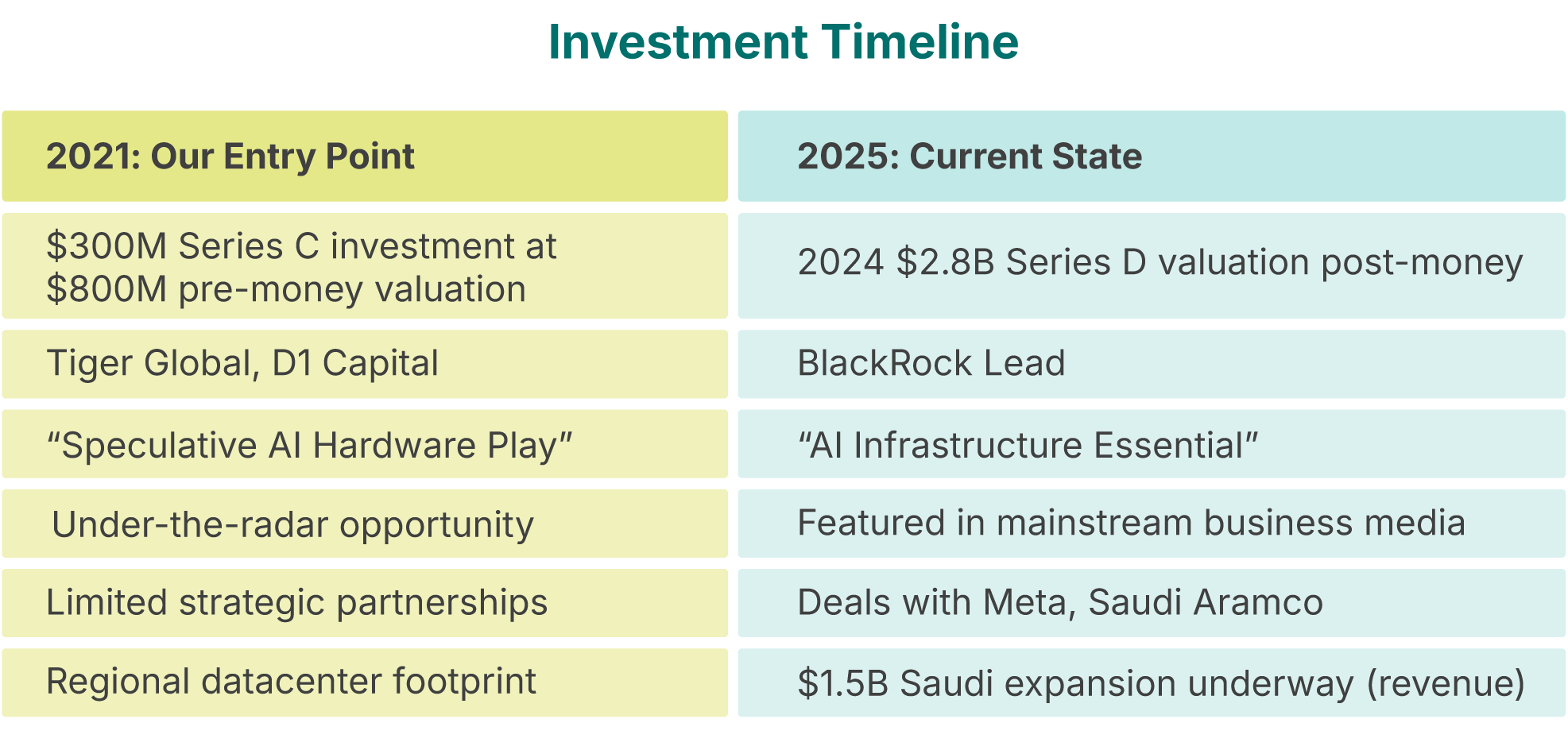

We had been tracking Groq since its 2018 Series B round and got to know the company in early 2019. We mobilized our alumni network to make a strong connection and were able to secure an allocation in the 2021 Series C.

For Strategic Asset Allocators: The Portfolio Perspective

AI infrastructure represents a crucial allocation within the broader technology ecosystem. Unlike software plays that can face instant competition, hardware innovation creates defensible moats through IP and manufacturing expertise.

Current market context:

- AI inference market CAGR projections: 19.2% through 2030 ($106B in 2025)

- Total addressable market: $255B by 2030

- Strategic position: "Picks and shovels" play in the AI gold rush

- Risk profile: Mid-stage venture with clear product-market fit

- Correlation: Low correlation with public markets, high correlation with AI adoption rates
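The two market figures above are consistent with each other: $106B compounding at 19.2% per year for the five years from 2025 to 2030 lands at about $255B. A short compound-growth check, using only the quoted numbers:

```python
# Compound growth: value_2030 = value_2025 * (1 + CAGR) ** years
start_value_b = 106.0   # USD billions, 2025
cagr = 0.192
years = 2030 - 2025

projected_2030 = start_value_b * (1 + cagr) ** years

# Inverse check: the CAGR implied by going from $106B to $255B in 5 years.
implied_cagr = (255.0 / start_value_b) ** (1 / years) - 1

print(f"Projected 2030 market: ${projected_2030:.0f}B")
print(f"CAGR implied by $106B -> $255B: {implied_cagr:.1%}")
```

In other words, the projection assumes the inference market roughly 2.4x in size over five years.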

Our $3M follow-on investment in the 2024 Series D was a calculated portfolio move, balancing earlier-stage investments with a proven technology that’s entering its scaling phase.

For Those Who Seek Exclusive Opportunities: The Inner Circle

What separates truly exceptional investors from the crowd? Access and timing.

Groq’s Series C in 2021 wasn’t broadly marketed. It was a carefully constructed syndicate of investors with deep technical understanding and strategic vision. The participants weren’t just writing checks; they were making a statement about the future of AI infrastructure.

Today, these early backers are regularly featured at invite-only events like:

- The AI Hardware Summit in Silicon Valley

- Sequoia's Annual Deep Tech Forum

- The Saudi PIF's Future Investment Initiative

This wasn’t luck; it was calculated vision paired with exclusive access. The same networks that provided our entry into Groq continue to surface opportunities in adjacent spaces before they reach mainstream awareness.

Comparative Analysis: Groq vs. Market Alternatives

For the analytically minded, here’s how Groq positioned against alternatives (see this report from April 2024):

As Groq moves to its next-generation LPU on 4nm silicon, its inference advantages should continue to grow. Groq announced this partnership with Samsung in 2023 for its next-generation hardware.

Our investment thesis centered on Groq’s ability to deliver on both performance and adaptability, a combination that established players couldn’t match and most startups couldn’t execute on. Groq also has an energy advantage over competitors: at an architectural level, Groq LPUs are up to 10X more energy efficient than GPUs. Because energy consumption is one of the biggest cost factors in running AI models, better energy efficiency generally translates into lower operating costs.
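A back-of-envelope sketch shows how an efficiency ratio flows through to electricity cost. Only the "up to 10X" ratio comes from the text; the energy per token, electricity price, and daily token volume are hypothetical placeholders. The calculation also ignores hardware, cooling, and utilization, which matter a great deal in practice.

```python
# Hypothetical inputs; only the "up to 10X" efficiency ratio comes from the text.
ENERGY_PER_TOKEN_GPU_J = 1.0          # hypothetical joules per generated token
EFFICIENCY_RATIO = 10                 # "up to 10X" (best case quoted above)
ENERGY_PER_TOKEN_LPU_J = ENERGY_PER_TOKEN_GPU_J / EFFICIENCY_RATIO
PRICE_PER_KWH_USD = 0.10              # hypothetical electricity price
TOKENS_PER_DAY = 1_000_000_000        # hypothetical daily inference volume
JOULES_PER_KWH = 3_600_000

def daily_energy_cost(joules_per_token: float) -> float:
    """Electricity cost (USD/day) to generate TOKENS_PER_DAY tokens."""
    kwh = joules_per_token * TOKENS_PER_DAY / JOULES_PER_KWH
    return kwh * PRICE_PER_KWH_USD

gpu_cost = daily_energy_cost(ENERGY_PER_TOKEN_GPU_J)
lpu_cost = daily_energy_cost(ENERGY_PER_TOKEN_LPU_J)
print(f"GPU: ${gpu_cost:,.2f}/day  LPU: ${lpu_cost:,.2f}/day")
```

Because energy cost scales linearly with joules per token, a 10x efficiency gain cuts the electricity line item by 10x at any volume; the absolute dollar figures depend entirely on the assumed inputs.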

The Path Forward: From Technical Advantage to Market Dominance

Groq’s journey from technical innovation to market adoption follows a familiar pattern we’ve seen in other successful hardware investments:

- Technical breakthrough (2016-2020): Development of core architecture

- Early adopter traction (2021-2022): Initial enterprise deployments

- Market validation (2024): GroqCloud launch (March 2024) and viral adoption. As of this writing (March 2025), more than 1 million developers have signed up for GroqCloud in its first year.

- Strategic partnerships (2024-2025): Meta, Cisco, GlobalFoundries, and others

- Global expansion (2024-2025): Initial data center set up in December 2024, followed in 2025 by the announced $1.5B Saudi Aramco deal for expansion and international scaling.

- Market leadership (2025-): Infrastructure standardization (underway)

Each of these transitions has expanded Groq’s valuation, and our investors have benefited from positioning at the Series C and D stages: the ideal balance of de-risked technology and tremendous growth ahead.

Limited Access: Join The Next Wave Before It Crests

The window for Groq may have narrowed, but our investment thesis (identifying infrastructure plays that enable transformative technologies) continues to surface opportunities.

Three key insights drive our current outlook:

- The AI compute gap is widening: Demand for inference capacity is outpacing supply. Multiple cloud providers reported waitlists for AI inference capacity in 2023-24, allocating resources by priority rather than meeting all demand.

- Specialized architecture wins: One-size-fits-all approaches can't meet the diverse demands of AI workloads.

- The next bottleneck has emerged: Memory bandwidth and interconnect technologies represent the next frontier.
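Why memory bandwidth? A common rough approximation for single-stream (batch size 1) language-model decoding is that generating each token requires reading every model weight from memory once, so throughput is capped at roughly bandwidth divided by the weights' size. The sketch below applies that approximation to hypothetical configurations; it deliberately ignores KV-cache traffic, batching, and compute limits.

```python
# Rough memory-bound ceiling for batch-size-1 decoding:
#   tokens/s <= memory bandwidth / bytes of model weights
# Ignores KV-cache reads, batching, and compute limits.

def max_decode_tokens_per_s(params_billion: float, bytes_per_param: float,
                            bandwidth_gb_s: float) -> float:
    """Upper bound on tokens/s if every weight is streamed once per token."""
    model_gb = params_billion * bytes_per_param  # weight footprint in GB
    return bandwidth_gb_s / model_gb

# Hypothetical configurations, for illustration only.
for label, bw in [("1,000 GB/s memory", 1_000), ("2,000 GB/s memory", 2_000)]:
    tps = max_decode_tokens_per_s(params_billion=7, bytes_per_param=2, bandwidth_gb_s=bw)
    print(f"7B model, FP16, {label}: <= {tps:.0f} tokens/s")
```

Under this approximation, doubling bandwidth doubles the ceiling, and so does halving the bytes per parameter (for example, via quantization). That's why memory systems, rather than raw arithmetic, increasingly set the pace for inference.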

Key Takeaway: Act Ahead of the Curve

The Groq story illustrates a fundamental truth in venture capital: the most significant returns come from positioning ahead of market consensus. For technical visionaries who appreciate architectural innovation, for strategic allocators building exposure to essential infrastructure, and for those who value access to opportunities others simply can’t reach, this is your moment.

Our next investment round in our Deep Tech Fund, or in other AV Funds, gives you access to companies addressing these specific challenges: companies that, like Groq in 2021, are still flying under the radar of mainstream investors.

Learn More About the Deep Tech Fund

We are seeing strong interest in our Deep Tech Fund. Spots are limited, so we recommend securing yours promptly.

Investors in the fund will have exposure to a portfolio of high-tech game-changers and disruptive business models using advanced science and engineering to tackle the toughest and potentially most lucrative tech challenges.

Max Accredited Investor Limit: 249

Chris Sklarin

Managing Partner, Castor Ventures

Chris has 30+ years of experience in venture capital, product development, and sales engineering. As an investor, he has deployed over $100 million into companies across all stages, from seed to growth/venture. At AV, Chris has built Castor Ventures across Funds 2 through 9 into a portfolio of over 150 companies. Prior to Castor, Chris was a Vice President at Edison Partners, where he focused on Enterprise 2.0 and mobile investments. Previously, Chris served as Director of Business Development at a biomedical venture accelerator and at an early-stage venture firm. Earlier in his investing career, as part of JumpStart, a nationally recognized venture development organization, Chris sourced and executed seed-stage investments. Chris received his SB in Electrical Engineering from MIT in 1988 and his MBA from the Haas School of Business at the University of California, Berkeley.

This communication is from Alumni Ventures, a for-profit venture capital company that is not affiliated with or endorsed by any school. It is not personalized advice, and AV only provides advice to its client funds. This communication is neither an offer to sell, nor a solicitation of an offer to purchase, any security. Such offers are made only pursuant to the formal offering documents for the fund(s) concerned, and describe significant risks and other material information that should be carefully considered before investing. For additional information, please see here. Achievement of investment objectives, including any amount of investment return, cannot be guaranteed. Co-investors are shown for illustrative purposes only, do not reflect all organizations with which AV co-invests, and do not necessarily indicate future co-investors. Example portfolio companies shown are not available to future investors, except potentially in the case of follow-on investments. Venture capital investing involves substantial risk, including risk of loss of all capital invested. This communication includes forward-looking statements, generally consisting of any statement pertaining to any issue other than historical fact, including without limitation predictions, financial projections, the anticipated results of the execution of any plan or strategy, the expectation or belief of the speaker, or other events or circumstances to exist in the future. Forward-looking statements are not representations of actual fact, depend on certain assumptions that may not be realized, and are not guaranteed to occur. Any forward-looking statements included in this communication speak only as of the date of the communication. AV and its affiliates disclaim any obligation to update, amend, or alter such forward-looking statements, whether due to subsequent events, new information, or otherwise.